Have you ever watched a video and wondered, "How fast is that object moving?" Maybe it's a car speeding down the highway, an athlete sprinting, or even a celestial body moving across the night sky. Figuring this out might seem like something straight out of a CSI episode—especially when you have no camera calibration, no known object sizes, and zero reference measurements.

But here's the exciting part: with modern computer vision techniques and a bit of deep learning, we can get useful speed estimates from ordinary videos, as long as we find some way to pin down the scene's scale.

The Challenge: Speed Without References

Let's start with the basics. Speed is simply distance divided by time. In videos, time is straightforward: the interval between consecutive frames is one divided by the frame rate, so at 30 fps each frame lasts about 33 ms. But distance? That's where things get tricky. How do you convert pixels to meters when you don't know how big anything in the frame actually is?
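To make the arithmetic concrete, here is a minimal Python sketch. The function names and the `meters_per_pixel` value are my own illustrative assumptions; that scale factor is exactly the unknown the rest of this post tries to recover, and a single factor like this only holds for motion roughly parallel to the image plane at a fixed distance from the camera.

```python
import math


def pixel_speed(p0, p1, frames_elapsed, fps):
    """Return image-plane speed in pixels per second between two positions."""
    seconds = frames_elapsed / fps  # each frame lasts 1 / fps seconds
    return math.dist(p0, p1) / seconds


def metric_speed(pixels_per_second, meters_per_pixel):
    """Convert pixel speed to meters per second using an assumed scale."""
    return pixels_per_second * meters_per_pixel


# Hypothetical track: the object moves 30 px right and 40 px down (50 px total)
# over 5 frames of a 25 fps video, i.e. in 0.2 seconds.
px_per_s = pixel_speed((100, 200), (130, 240), frames_elapsed=5, fps=25.0)

# Assumed scale of 0.02 m per pixel. This number is made up for illustration.
speed = metric_speed(px_per_s, meters_per_pixel=0.02)
print(f"{px_per_s:.1f} px/s -> {speed:.2f} m/s")
```

The pixel speed is the easy half; everything that follows is about replacing the made-up scale with something you can defend.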

Maybe you've tried the standard approach of using known objects for scale (like cars or smartphones) and found nothing recognizable in your scene. Don't worry. We're about to dive into some more advanced techniques that can help.

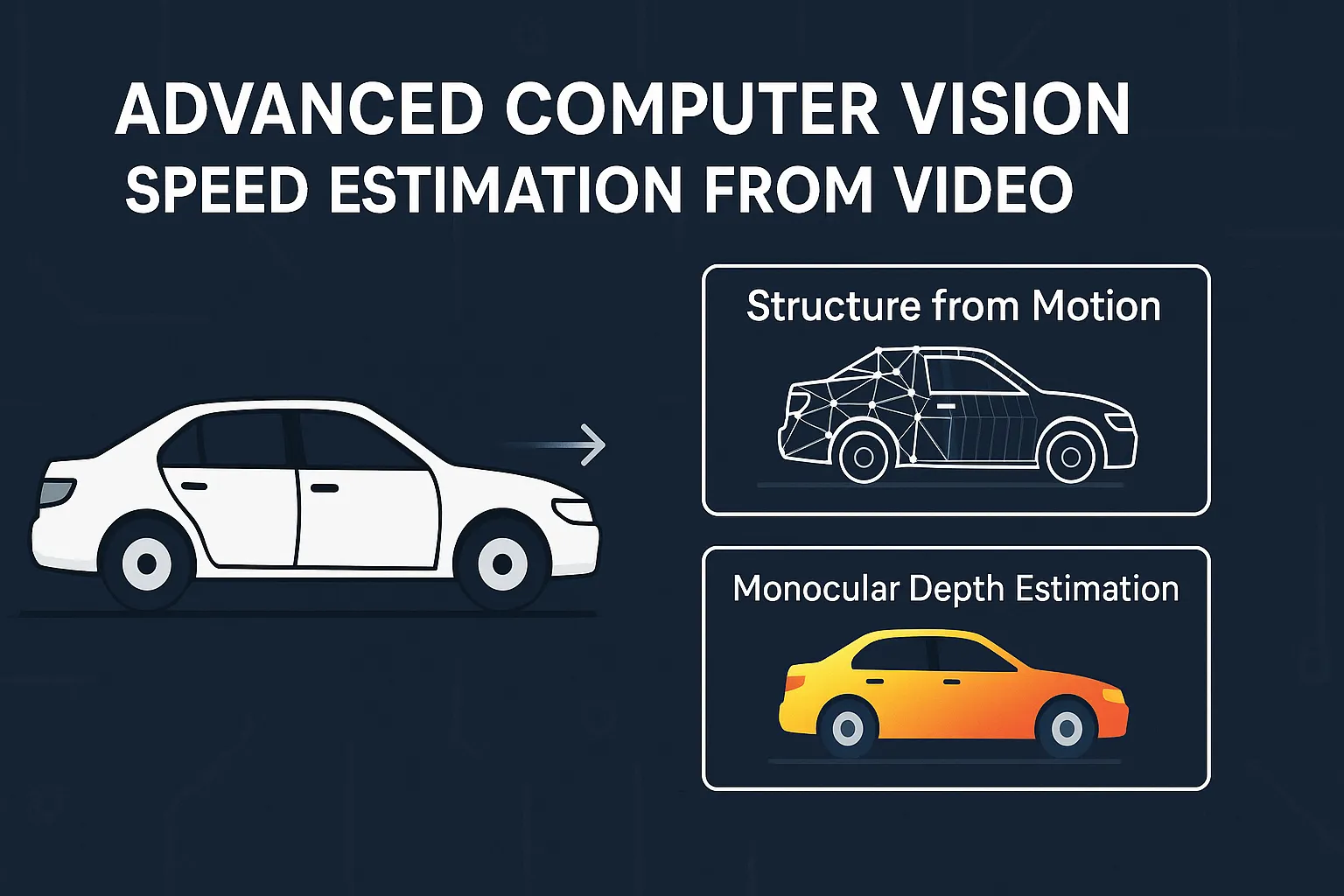

Structure from Motion: Building 3D from 2D

Have you ever been amazed by those 3D models created from drone footage? That's Structure from Motion (SfM) at work, and it's one of our secret weapons for speed estimation.

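SfM works a bit like human depth perception. When you look at something from different angles, your brain combines those perspectives to understand depth. Similarly, SfM analyzes multiple video frames to reconstruct a 3D scene and track the camera's movement through it. Two caveats matter for speed estimation: classic SfM assumes the scene itself is static, and from a single camera it recovers geometry only up to an unknown overall scale.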

Here's how we use it for speed calculation:

- The algorithm identifies distinctive visual features across frames

- It tracks how these features move from frame to frame

- Using geometry, it reconstructs both the 3D environment and camera positions

- Finally, you measure how far the object moved in this reconstructed space, then multiply by the scene's scale to get a real-world distance
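The last step can be sketched in a few lines of Python. This assumes you already have the object's 3D position in each frame, expressed in the reconstruction's own units, and that you have some estimate of how many meters one unit represents. The function name, the `meters_per_unit` parameter, and the sample coordinates are all hypothetical; an SfM package will not hand you a moving object's track or a metric scale by itself.

```python
import math


def track_speeds(positions, fps, meters_per_unit):
    """Speed for each pair of consecutive frames along a 3D track.

    positions: one (x, y, z) point per consecutive frame, in reconstruction units.
    meters_per_unit: assumed scale factor from reconstruction units to meters.
    """
    seconds_per_frame = 1.0 / fps
    return [
        math.dist(a, b) * meters_per_unit / seconds_per_frame
        for a, b in zip(positions, positions[1:])
    ]


# Hypothetical object positions in three consecutive frames (made-up values).
track = [(0.00, 0.0, 5.00), (0.03, 0.0, 5.04), (0.06, 0.0, 5.08)]

# Assumed: 30 fps video, and 1 reconstruction unit corresponds to 2 meters.
speeds = track_speeds(track, fps=30.0, meters_per_unit=2.0)
average = sum(speeds) / len(speeds)
print(f"average speed: {average:.2f} m/s")
```

Averaging over several frames is a deliberate choice here: per-frame displacements are tiny, so noise in the reconstruction shows up strongly in any single interval.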

What I love about SfM is that it gives you a complete spatial understanding of the scene. You're not just tracking pixels—you're reconstructing reality.

Monocular Depth Estimation: AI's Depth Perception

What if I told you AI can now look at a single image and predict how far away everything is? This might sound like science fiction, but it's exactly what monocular depth estimation does.

These neural networks have been trained on millions of images with corresponding depth information, and they've learned to recognize subtle visual cues that indicate distance—things like texture gradients, object overlap, and perspective.

For speed estimation, this approach is incredibly powerful:

- Run a depth model (like MiDaS or Monodepth2) on each frame. MiDaS, for one, predicts relative depth rather than meters, so its output still needs a scale

- Track your object of interest across frames

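- Measure how its position changes over time, combining its image location with its estimated depth (depth alone only captures motion toward or away from the camera)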

- Convert this to speed using the frame rate
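Here is a rough sketch of the last two steps using the standard pinhole camera model. It rests on assumptions the steps above do not give you for free: the camera is static, the depth values are already in meters, and the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known or guessed. The function names and all numbers are hypothetical.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection: pixel (u, v) at a given depth -> camera-frame (X, Y, Z)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def speed_from_depth(obs_a, obs_b, frames_elapsed, fps, intrinsics):
    """Speed between two observations, each given as (u, v, depth_in_meters)."""
    point_a = backproject(*obs_a, **intrinsics)
    point_b = backproject(*obs_b, **intrinsics)
    seconds = frames_elapsed / fps
    return math.dist(point_a, point_b) / seconds


# Assumed intrinsics for a 1280x720 video (focal length in pixels is a guess).
intrinsics = {"fx": 1000.0, "fy": 1000.0, "cx": 640.0, "cy": 360.0}

# Hypothetical observations: the object stays 10 m away and moves 300 px sideways.
first = (640.0, 360.0, 10.0)
later = (940.0, 360.0, 10.0)

speed = speed_from_depth(first, later, frames_elapsed=15, fps=30.0, intrinsics=intrinsics)
print(f"estimated speed: {speed:.2f} m/s")
```

Note that the depth never changes in this example, yet the object is clearly moving. That is why tracking the full 3D position matters more than tracking depth alone.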

I recently used this technique to analyze wildlife movement patterns in a nature documentary, and the results were fascinating—we could determine hunting speeds of predators without disturbing their natural behavior.

Choosing the Right Approach for Your Scenario

Both methods have their sweet spots. In my experience:

Structure from Motion works best when:

- Your scene has plenty of distinctive visual features

- The camera is moving (or multiple viewpoints are available)

- You need a complete 3D understanding of the environment

Monocular Depth is ideal when:

- The video quality isn't perfect (perhaps blurry or low-resolution)

- You need frame-by-frame distance estimates

- The scene lacks texture or distinctive features

And here's my favorite approach—why not use both? By combining SfM's structural understanding with the frame-by-frame insights from depth estimation, you can create a robust hybrid system that overcomes the limitations of each individual method.
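One way such a hybrid could work (a sketch of one option, not the only design) is to use sparse depths from SfM to anchor a dense relative depth map. Models like MiDaS predict inverse depth that is only defined up to a scale and a shift, so the idea is to fit those two numbers by least squares at pixels where SfM also provides a depth. The function names and sample values below are hypothetical, and the result is still in SfM units until the reconstruction itself is scaled to meters.

```python
def fit_scale_shift(predicted, target):
    """Least-squares fit of target ~ scale * predicted + shift."""
    n = len(predicted)
    if n < 2 or n != len(target):
        raise ValueError("need at least two matching samples")
    mean_p = sum(predicted) / n
    mean_t = sum(target) / n
    variance = sum((p - mean_p) ** 2 for p in predicted)
    if variance == 0:
        raise ValueError("predicted values must not all be equal")
    covariance = sum((p - mean_p) * (t - mean_t) for p, t in zip(predicted, target))
    scale = covariance / variance
    shift = mean_t - scale * mean_p
    return scale, shift


def aligned_depth(relative_inverse_depth, scale, shift):
    """Turn a relative inverse-depth value into a depth in SfM units."""
    return 1.0 / (scale * relative_inverse_depth + shift)


# Hypothetical relative inverse depths from the network at three pixels...
network_values = [0.9, 0.4, 0.15]
# ...and depths for the same pixels from the SfM reconstruction (made up).
sfm_depths = [2.0, 4.0, 8.0]

# Fit in inverse-depth space, since that is what the network predicts.
scale, shift = fit_scale_shift(network_values, [1.0 / z for z in sfm_depths])

# Depth for any other pixel, e.g. the tracked object, in SfM units.
object_depth = aligned_depth(0.3, scale, shift)
print(f"scale={scale:.3f} shift={shift:.3f} object depth={object_depth:.2f}")
```

With real data you would want many more than three anchor points and some outlier rejection, since both the SfM points and the network output are noisy.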

Practical Implementation Tips

If you're excited to try this yourself, here are some practical starting points:

- For SfM: COLMAP is an excellent open-source package. It has a bit of a learning curve, but the results are worth it.

- For Depth Estimation: MiDaS is my go-to model, available through PyTorch Hub. It's relatively fast and gives impressive results even on consumer hardware.

- For Object Tracking: YOLOv8 combined with DeepSORT works wonders for keeping tabs on objects across frames.

I always recommend starting with a test video where you actually know the speeds (perhaps film something while using a speedometer). This gives you a baseline to calibrate your approach.
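A simple way to use such a test video is to fit one correction factor between your pipeline's raw output and the known speeds. The sketch below fits that factor by least squares through the origin; the function name and the sample numbers are hypothetical, and it assumes the raw estimates are off by a constant multiplier, which is only reasonable if the camera setup stays the same between the test video and the real one.

```python
def calibration_factor(known_speeds, estimated_speeds):
    """Scale k minimizing the squared error between known and k * estimated."""
    if len(known_speeds) != len(estimated_speeds) or not known_speeds:
        raise ValueError("need matching, non-empty lists")
    numerator = sum(k * e for k, e in zip(known_speeds, estimated_speeds))
    denominator = sum(e * e for e in estimated_speeds)
    if denominator == 0:
        raise ValueError("estimated speeds must not all be zero")
    return numerator / denominator


# Hypothetical test runs: speedometer readings in m/s...
known = [5.0, 10.0, 15.0]
# ...and what the pipeline reported for the same runs, in arbitrary units.
estimated = [2.0, 4.0, 6.0]

factor = calibration_factor(known, estimated)

# Apply the factor to a new raw estimate from the same camera setup.
calibrated = factor * 3.0
print(f"factor={factor:.2f}, calibrated speed={calibrated:.2f} m/s")
```

If the fitted factor changes a lot from one test run to the next, that is a sign the error is not a simple scale problem and the pipeline itself needs another look.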

Real-World Applications

The techniques I've shared aren't just academic exercises—they're being used in fascinating ways:

- Traffic monitoring systems that don't require expensive radar equipment

- Sports analytics to track athlete performance

- Wildlife research without intrusive tracking devices

- Autonomous vehicle development

- Forensic video analysis

I'm particularly excited about the environmental applications—being able to study animal movement patterns without disturbing their natural behavior is a game-changer for conservation efforts.

Wrapping Up

Speed estimation from video without prior information is no longer science fiction—it's science fact. Whether you're using Structure from Motion to build complete 3D reconstructions or leveraging the power of neural networks for depth estimation, these techniques open up incredible possibilities.

And the best part? Many of the tools are open-source and accessible to anyone with basic programming knowledge and a decent GPU.

What would you use these techniques for? I'd love to hear your ideas and answer any questions in the comments below. And stay tuned—in my next post, I'll walk through a complete Python implementation using these methods!